Model Selection in Data Analysis: What are AIC and BIC?

One of the most important issues in data analysis is choosing the right model. Selecting an appropriate model and its parameters is harder than it may seem, and a poorly chosen model can lengthen the analysis and produce inaccurate results. So how do we choose the right model? Let's examine two widely used metrics, AIC and BIC, together.

AIC and BIC are metrics that weigh a model's complexity against its ability to represent the data. Both measure complexity by the number of parameters, and both are computed from the model's likelihood, so they are used with parametric models. They can be applied in many areas, such as time series analysis, forecasting, clustering and classification.

What is AIC?

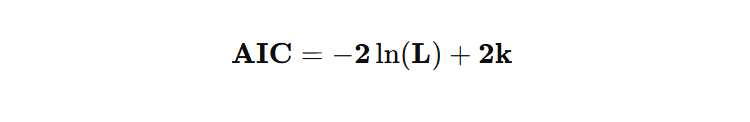

AIC (Akaike Information Criterion) is a metric that evaluates both how well a model explains the data and how complex the model is. AIC decreases as the model fits the data better and as its complexity decreases. Therefore, when comparing models fitted to the same data, a lower AIC value indicates a better model.

So what does it mean for a model to explain the data, and how do we measure its complexity? Let's explore these concepts!

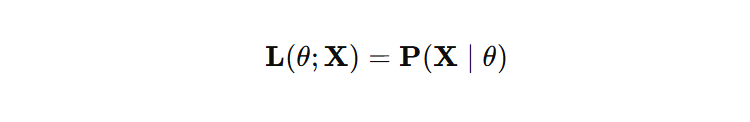

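How well a model explains the data is measured by the probability of observing the data under the selected parameters. The more meaningful and accurate the parameters we choose, the better our model will represent the data. This probability (a probability density for continuous data) is called the likelihood (L). Assuming the n observations are independent, it is the product of their individual probabilities, where θ denotes the model parameters:

$$L(\theta) = P(x_1, \dots, x_n \mid \theta) = \prod_{i=1}^{n} p(x_i \mid \theta)$$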
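As a minimal sketch, assume the data are independent draws from a normal distribution. This is an assumption for illustration only, since the article does not fix a distribution. The code below computes the log-likelihood of a small hypothetical sample under two parameter choices. In practice ln(L) is used instead of L because a product of many small probabilities quickly underflows. The function name `gaussian_log_likelihood` is illustrative.

```python
import math

def gaussian_log_likelihood(data, mu, sigma):
    """ln L(mu, sigma) for independent observations from a normal distribution."""
    # The log of a product of densities is the sum of the log-densities
    total = 0.0
    for x in data:
        total += -0.5 * math.log(2 * math.pi * sigma ** 2) - (x - mu) ** 2 / (2 * sigma ** 2)
    return total

# Hypothetical sample, chosen only for illustration
data = [1.0, 2.0, 3.0]

good = gaussian_log_likelihood(data, mu=2.0, sigma=1.0)  # parameters close to the data
poor = gaussian_log_likelihood(data, mu=5.0, sigma=1.0)  # parameters far from the data
print(f"mu=2: {good:.4f}, mu=5: {poor:.4f}")  # mu=2: -3.7568, mu=5: -17.2568
```

The parameters that sit closer to the data give a higher (less negative) log-likelihood, which is what "explaining the data better" means here.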

The second part of the AIC formula is the complexity of the model. It is measured by the total number of free parameters and denoted by k. For example, the regression equation y = ax + b has two free parameters, a and b, which determine the structure of the model, so k = 2. As unnecessary parameters are added, the model becomes more complex and harder to interpret. For this reason, a higher k increases AIC and counts against the model.
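The sketch below fits the line y = ax + b by ordinary least squares on a small hypothetical data set, then counts its free parameters. The helper name `fit_line` is illustrative. When a regression is fitted by maximum likelihood with normal errors, some software also counts the error variance as a parameter, which would make k = 3.

```python
def fit_line(xs, ys):
    """Fit y = a*x + b by ordinary least squares and return the parameters (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: sum of cross-deviations divided by sum of squared x-deviations
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # hypothetical data lying exactly on y = 2x + 1

params = fit_line(xs, ys)
k = len(params)            # free parameters: a and b
print(params, k)           # (2.0, 1.0) 2
```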

The most important issue to keep in mind when using AIC is overfitting. AIC penalizes unnecessary parameters relatively lightly, so it places more weight on how closely the model fits the data than on how simple the model is. An overfit model has learned the noise in the sample and lost its ability to generalize, yet it still fits that sample very well. As a result, AIC can rank an overfitting model as the better one. This should be taken into account when interpreting the analysis results.
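To see why this light penalty can let an overfit model through, recall the standard definition AIC = 2k − 2 ln(L). Under this definition each extra parameter costs exactly 2 points. The hypothetical helper below computes the change in AIC when a model gains `extra_params` parameters and its log-likelihood rises by `loglik_gain`. Any gain larger than 1 log-likelihood unit per added parameter lowers AIC, even if that gain comes from fitting noise.

```python
def aic_change(loglik_gain, extra_params):
    """Change in AIC = 2k - 2 ln(L) when k grows by extra_params and ln(L) by loglik_gain."""
    return 2 * extra_params - 2 * loglik_gain

# One extra parameter that raises ln(L) by 1.5 (possibly by fitting noise): AIC drops
print(aic_change(loglik_gain=1.5, extra_params=1))  # -1.0
# One extra parameter that raises ln(L) by only 0.5: AIC rises
print(aic_change(loglik_gain=0.5, extra_params=1))  # 1.0
```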

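The AIC formula combines L and k as follows, where L is the maximized likelihood of the model and ln is the natural logarithm:

$$\mathrm{AIC} = 2k - 2\ln(L)$$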
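Here is a minimal sketch of the formula in Python. It assumes a normal model whose mean and standard deviation (k = 2) are estimated by maximum likelihood from a small hypothetical sample. AIC uses the maximized likelihood, so the parameters are set to their maximum-likelihood estimates before ln(L) is evaluated. Both function names are illustrative.

```python
import math

def aic(log_likelihood, k):
    """Akaike Information Criterion: 2k - 2 ln(L)."""
    return 2 * k - 2 * log_likelihood

def normal_max_log_likelihood(data):
    """Maximized ln(L) of a normal model. The MLEs are the sample mean and the
    variance computed with divisor n (not n - 1)."""
    n = len(data)
    mu = sum(data) / n
    var = sum((x - mu) ** 2 for x in data) / n
    return sum(-0.5 * math.log(2 * math.pi * var) - (x - mu) ** 2 / (2 * var) for x in data)

data = [1.0, 2.0, 3.0]  # hypothetical sample
log_l = normal_max_log_likelihood(data)
print(round(aic(log_l, k=2), 4))
```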

What is BIC?

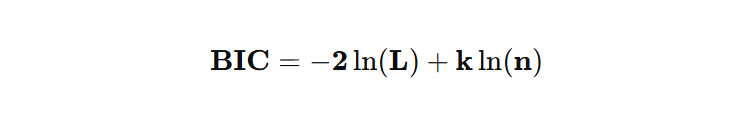

The BIC (Bayesian Information Criterion) metric follows the same logic as AIC. The important difference is that BIC penalizes model complexity (k) more heavily. Instead of being multiplied by 2, the number of free parameters is multiplied by ln(n), where n is the number of data points. The penalty for each parameter therefore grows with the number of data points, so models with unnecessary parameters are penalized more heavily on larger data sets. Because of this, BIC tends to select simpler models and reduces the risk of overfitting.

The formula for the BIC metric is as follows:

$$\mathrm{BIC} = k\ln(n) - 2\ln(L)$$
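The BIC formula can be sketched the same way. The code below uses an illustrative helper name and also checks the claim that BIC penalizes parameters more heavily than AIC. The per-parameter penalty is ln(n) for BIC versus 2 for AIC, so BIC becomes the stricter criterion as soon as ln(n) > 2, which happens at n = 8 data points.

```python
import math

def bic(log_likelihood, k, n):
    """Bayesian Information Criterion: k ln(n) - 2 ln(L)."""
    return k * math.log(n) - 2 * log_likelihood

# Per-parameter penalty: 2 for AIC, ln(n) for BIC
for n in (5, 8, 100, 10_000):
    label = "BIC stricter" if math.log(n) > 2 else "AIC stricter"
    print(n, round(math.log(n), 3), label)

# Smallest sample size at which BIC's penalty exceeds AIC's
first_n = next(n for n in range(1, 100) if math.log(n) > 2)
print(first_n)  # 8
```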

AIC and BIC: Which Should We Prefer?

The most important difference between these two metrics is how heavily they penalize model complexity. AIC is the better choice when we compare models with different numbers of parameters and do not want to penalize the larger models too heavily. This gives AIC more flexibility in model selection. In addition, complex data may require more detailed and complex models. Because AIC penalizes complexity less, it makes such models easier to select.

BIC is preferred more often when the candidate models are simple and have few parameters. Because its penalty grows with the number of data points, BIC becomes especially relevant for large data sets. BIC also guards better against overfitting, so it may be the better choice when the data are highly variable or uncertain.
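The sketch below shows how the two criteria can disagree. It scores two hypothetical models fitted to the same n = 100 data points. The log-likelihoods and parameter counts are made-up numbers for illustration, not results from real data. The larger model fits slightly better, and that is enough for AIC but not for BIC.

```python
import math

def aic(log_likelihood, k):
    return 2 * k - 2 * log_likelihood

def bic(log_likelihood, k, n):
    return k * math.log(n) - 2 * log_likelihood

n = 100
# Hypothetical candidates: name -> (maximized ln(L), number of parameters k)
models = {"simple": (-100.0, 2), "complex": (-96.0, 5)}

aic_scores = {name: aic(ll, k) for name, (ll, k) in models.items()}
bic_scores = {name: bic(ll, k, n) for name, (ll, k) in models.items()}

# Lower is better for both criteria
best_aic = min(aic_scores, key=aic_scores.get)
best_bic = min(bic_scores, key=bic_scores.get)
print(aic_scores, best_aic)  # simple 204.0, complex 202.0 -> complex
print(bic_scores, best_bic)  # simple ~209.21, complex ~215.03 -> simple
```

With these numbers, the extra three parameters raise ln(L) by 4. That covers AIC's penalty of 6 points but not BIC's penalty of about 13.8 points (3 × ln 100).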

If you would like to hear about new articles and similar content, you can follow me on the accounts below.

LinkedIn: www.linkedin.com/in/mustafabayhan/

Medium: medium.com/@bayhanmustafa